Missing Link Electronics

NPAP Evaluation Reference Design Overview

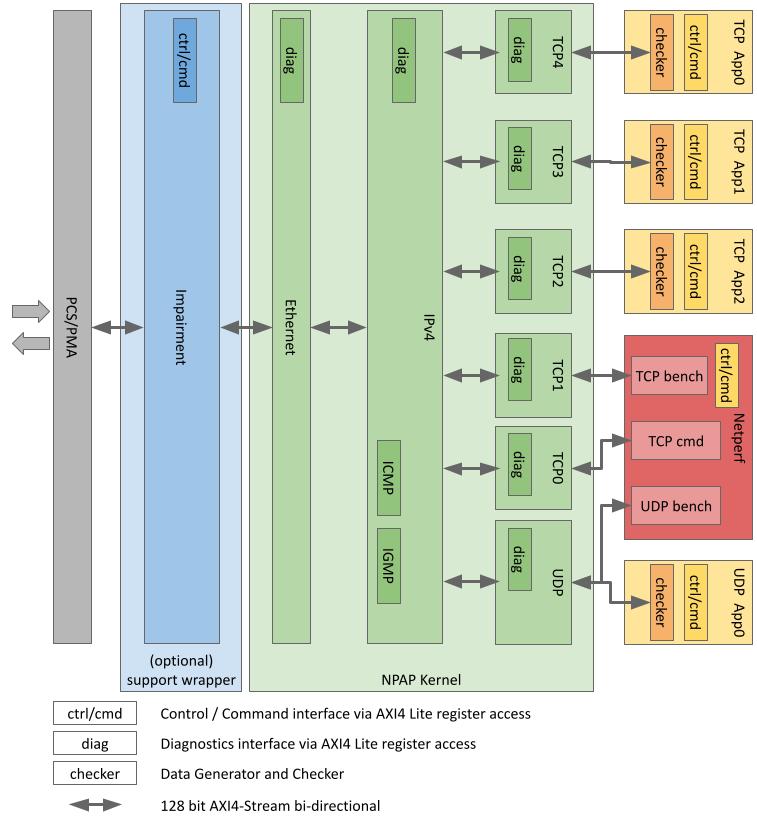

MLE’s Network Protocol Accelerator Platform (NPAP) for 1/2.5/5/10/25/40/50/100 Gigabit Ethernet is a TCP/UDP/IP network protocol Full-Accelerator subsystem that instantiates the standalone 128-bit TCP/IP Stack technology from the German Fraunhofer Heinrich-Hertz-Institute (HHI).

This document provides an overview of the Evaluation Reference Designs (ERD) for NPAP available from MLE. Besides the technical details of the supported FPGA boards and the number of NPAP instances, it explains how to connect to SFP/SFP+/QSFP and how to set up the LAN for IP port numbers, and gives a short introduction to Netperf for bandwidth and latency benchmarking.

Important Legal Information

The information disclosed to you hereunder (the “Materials”) is provided solely for the selection and use of products from Missing Link Electronics, Inc. (MLE). To the maximum extent permitted by applicable law: (1) Materials are made available “AS IS” and with all faults, MLE hereby DISCLAIMS ALL WARRANTIES AND CONDITIONS, EXPRESS, IMPLIED, OR STATUTORY, INCLUDING BUT NOT LIMITED TO WARRANTIES OF MERCHANTABILITY, NON-INFRINGEMENT, OR FITNESS FOR ANY PARTICULAR PURPOSE; and (2) MLE shall not be liable (whether in contract or tort, including negligence, or under any other theory of liability) for any loss or damage of any kind or nature related to, arising under, or in connection with, the Materials (including your use of the Materials), including for any direct, indirect, special, incidental, or consequential loss or damage (including loss of data, profits, goodwill, or any type of loss or damage suffered as a result of any action brought by a third party) even if such damage or loss was reasonably foreseeable or MLE had been advised of the possibility of the same. MLE assumes no obligation to correct any errors contained in the Materials or to notify you of updates to the Materials or to product specifications. You may not reproduce, modify, distribute, or publicly display the Materials without prior written consent. Certain products are subject to the terms and conditions of MLE’s limited warranty, please refer to MLE’s License Agreement which can be viewed at https://www.missinglinkelectronics.com/us-license; IP cores may be subject to warranty and support terms contained in a license issued to you by MLE.

MLE PRODUCTS ARE NOT DESIGNED OR INTENDED TO BE FAIL-SAFE, OR FOR USE IN ANY APPLICATION REQUIRING FAIL-SAFE PERFORMANCE. CUSTOMER ASSUMES THE SOLE RISK AND LIABILITY OF ANY USE OF MLE PRODUCTS IN SUCH APPLICATIONS.

Copyright 2025 Missing Link Electronics, Inc. All trademarks are the property of their respective owners (please refer to https://www.missinglinkelectronics.com/resources/legal-notices/trademark-notices/ ).