1. Evaluation Reference Design Overview

MLE usually provides its IP cores as an Evaluation Reference Design (ERD), which both shows how the implementation is done and serves as a test platform for evaluating the performance and functions of the IP. Because NPAP is a highly configurable IP core, MLE uses multiple designs with different configurations on multiple boards to cover all the functions NPAP offers. A single ERD can include multiple NPAP instances, each connected to a different SFP port. This approach is used to demonstrate different PHY attachment methods or to showcase higher throughput by running multiple instances in parallel.

1.1. Overview of Supported Development Boards

The table below lists the MLE NPAP Evaluation Reference Designs. Depending on the FPGA board, more than one Evaluation Reference Design may be available for evaluation. The table reflects NPAP Evaluation Reference Design version 3.4.6.

The Testing Status column in the table indicates the current availability and development stage of each design:

Ready: The design is stable, tested, and available for customer evaluation upon request.

EOL: The design is available for internal testing and may be provided to partners for preliminary evaluation. However, the underlying hardware (that is, the FPGA board and/or FPGA device) has been discontinued.

Early Access: The design is available for internal testing and may be provided to partners for preliminary evaluation.

In Development: The design is actively being worked on and is not yet available for testing.

Road Map: The design is planned for a future release, but active development has not yet started.

ERD Number |

Board / Device Family |

Testing Status |

NPAP Instance |

NPAP Speed |

MAC Clock |

Netperf |

TCP Cores |

UDP Cores |

IP Address |

SFP Port No |

|---|---|---|---|---|---|---|---|---|---|---|

ERD 0 |

AMD ZCU102 |

Ready |

1 |

1G |

Sync |

yes |

4 |

4 |

192.168.0.1 |

0 |

2 |

1G |

Async |

yes |

4 |

4 |

192.168.0.2 |

1 |

|||

ERD 1 |

AMD ZCU102 |

Ready |

1 |

10G |

Sync |

yes |

8 |

1 |

192.168.1.1 |

0 |

ERD 2 |

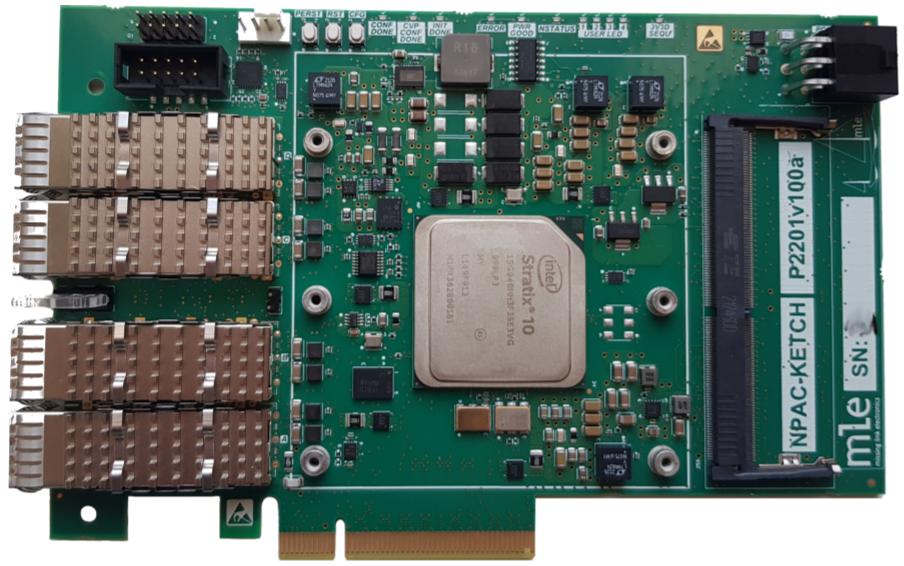

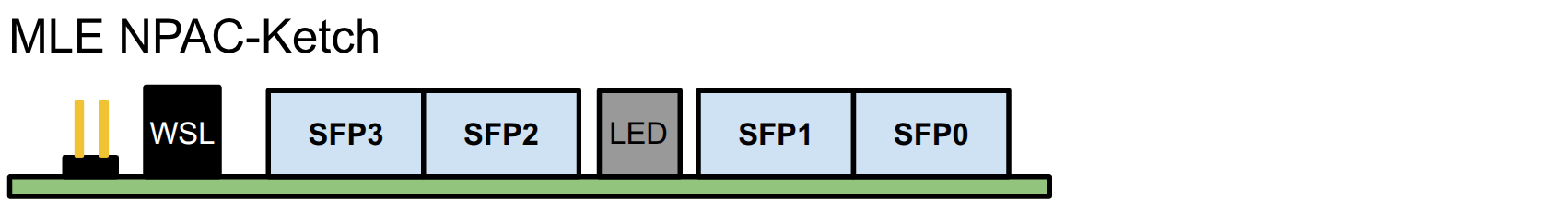

MLE NPAC-KETCH |

Ready |

1 |

10G |

Async |

yes |

1 |

1 |

192.168.2.1 |

0 |

2 |

10G |

Async |

yes |

1 |

1 |

192.168.2.2 |

1 |

|||

ERD 3 |

AMD ZCU111 |

Ready |

1 |

25G |

Async |

yes |

5 |

1 |

192.168.3.1 |

0 |

ERD 4 |

MLE NPAC-KETCH |

Road Map |

1 |

40G |

Async |

yes |

1 |

1 |

192.168.4.1 |

0,1,2,3 |

ERD 5 |

AMD ZCU111 |

Early Access |

1 |

100G |

Async |

yes |

3 |

1 |

192.168.5.1 |

0,1,2,3 |

ERD 7 |

Microchip MPF300 |

Early Access |

1 |

10G |

Sync |

yes |

3 |

2 |

192.168.7.1 |

0 |

ERD 8 |

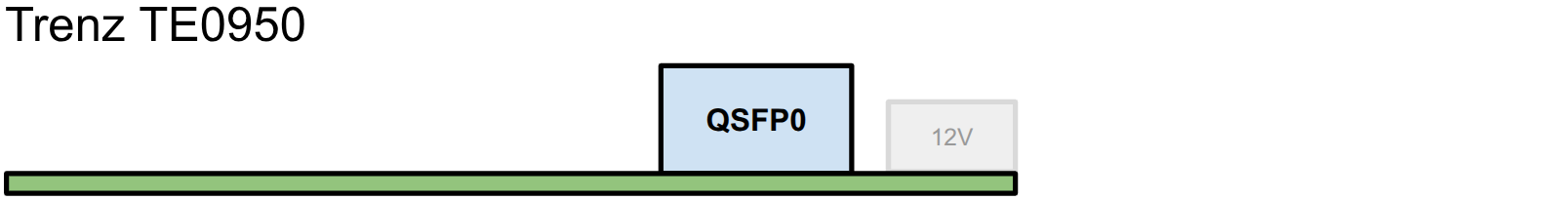

Trenz TE0950 |

Early Access |

1 |

25G |

Async |

yes |

2 |

1 |

192.168.8.1 |

QSFP0:0 |

ERD 10 |

AMD Alveo U200 |

EOL |

1 |

25G |

Async |

yes |

5 |

2 |

192.168.10.1 |

QSFP1:0 |

2 |

25G |

Async |

yes |

5 |

2 |

192.168.10.2 |

QSFP1:1 |

|||

ERD 11 |

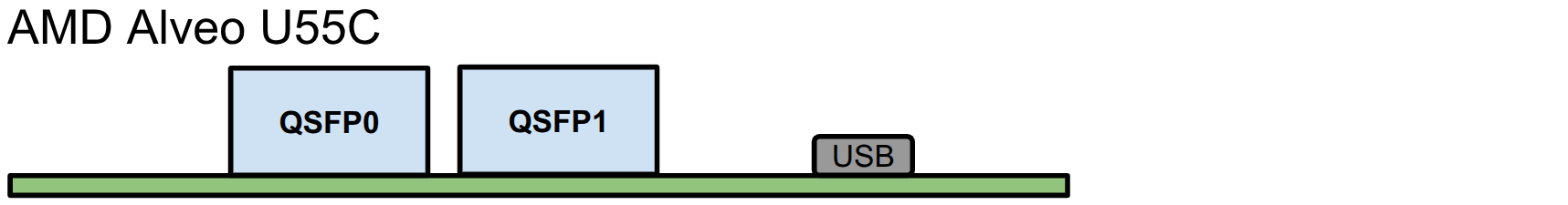

AMD Alveo U55C |

Early Access |

1 |

25G |

Async |

yes |

1 |

1 |

192.168.11.1 |

QSFP0:0 |

2 |

25G |

Async |

yes |

1 |

1 |

192.168.11.2 |

QSFP0:1 |

|||

3 |

25G |

Async |

yes |

1 |

1 |

192.168.11.3 |

QSFP0:2 |

|||

4 |

25G |

Async |

yes |

1 |

1 |

192.168.11.4 |

QSFP0:3 |

|||

ERD 12 |

Lattice Versa-G |

Early Access |

1 |

25G |

Async |

yes |

1 |

1 |

192.168.12.1 |

0 |

ERD 13 |

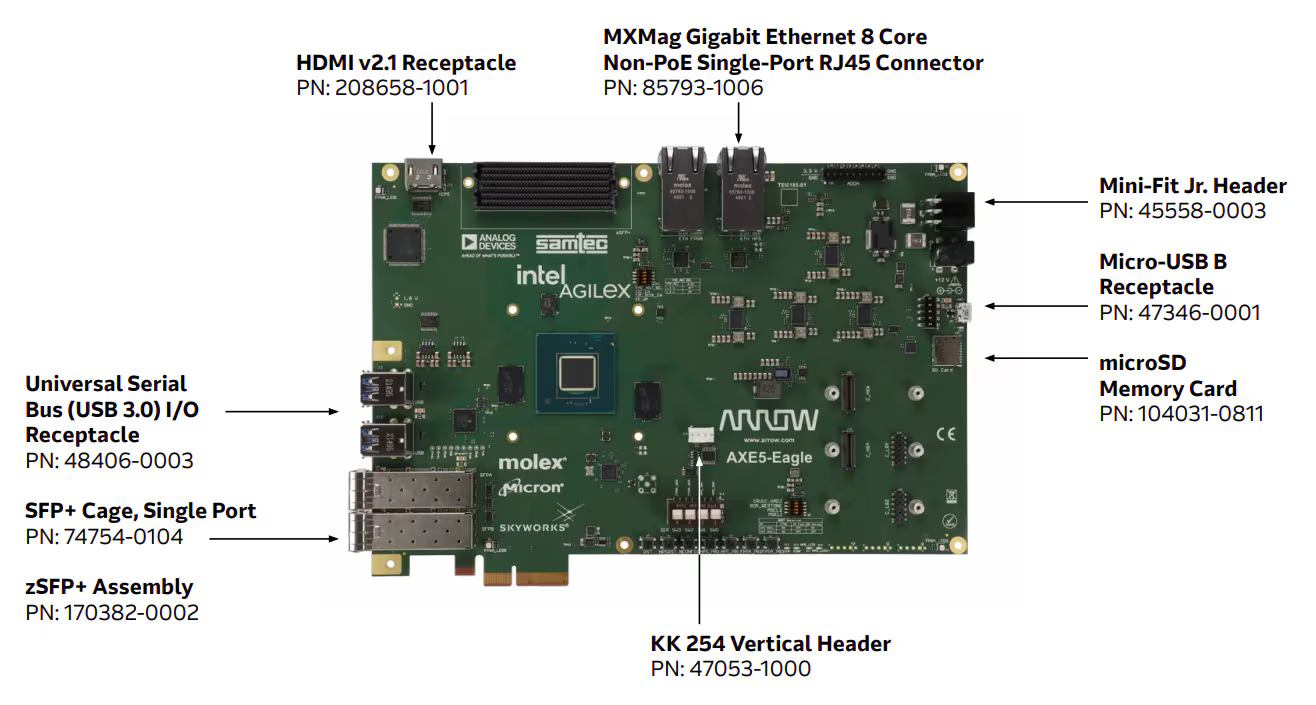

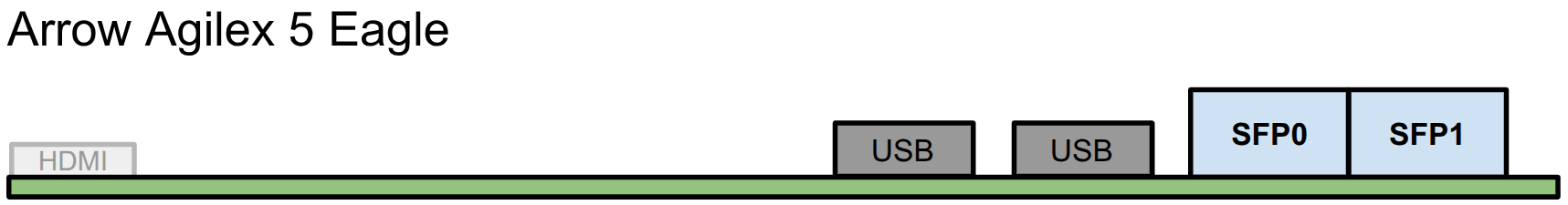

Arrow Agilex 5E |

Early Access |

1 |

25G |

Async |

yes |

5 |

3 |

192.168.13.1 |

0 |

ERD 14 |

AMD ZCU111 |

Early Access |

1 |

10G |

Async |

yes |

5 |

1 |

192.168.14.1 |

0 |

ERD 15 |

Avnet Tria AUB15P |

In Development |

1 |

10G |

Async |

yes |

1 |

1 |

192.168.15.1 |

0 |

ERD 101 |

AMD ZCU102 |

Road Map |

1 |

10G |

Sync |

no |

0 |

5 |

192.168.101.1 |

0 |
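The IP addresses in the table appear to follow the pattern 192.168.<ERD number>.<NPAP instance>. The following Python sketch reproduces that pattern so a test script can derive the address of an instance. The function name `erd_instance_ip` is hypothetical and not part of any MLE deliverable, and the pattern is only an observation from the table, not a documented guarantee.

```python
import ipaddress


def erd_instance_ip(erd_number: int, instance: int) -> ipaddress.IPv4Address:
    """Return 192.168.<erd_number>.<instance>.

    Assumption: this mirrors the addressing pattern observed in the ERD
    table; it is not an official MLE API.
    """
    if not 0 <= erd_number <= 255:
        raise ValueError("ERD number must fit into one IPv4 octet")
    if not 1 <= instance <= 254:
        raise ValueError("NPAP instance must be in the range 1..254")
    return ipaddress.IPv4Address(f"192.168.{erd_number}.{instance}")


if __name__ == "__main__":
    # ERD 11 (AMD Alveo U55C), third NPAP instance
    print(erd_instance_ip(11, 3))
```

For example, `erd_instance_ip(0, 2)` yields the address listed for the second NPAP instance of ERD 0.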

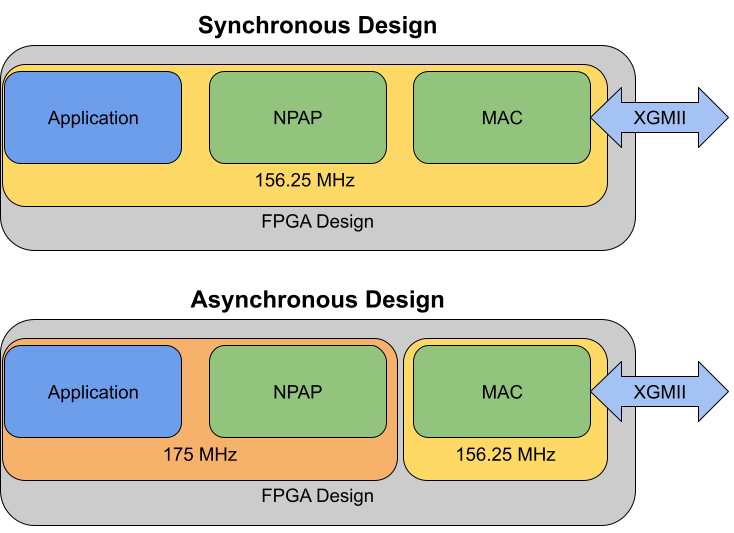

1.2. Synchronous vs Asynchronous MAC

The image below shows the difference between a synchronous Ethernet MAC design and an asynchronous Ethernet MAC design.

The synchronous Ethernet MAC design uses the same clock for NPAP and for the Ethernet MAC (here both are 156.25 MHz). The asynchronous Ethernet MAC design uses a different clock for NPAP (here 175 MHz) than for the Ethernet MAC (here 156.25 MHz).
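To get a feeling for what the two clock frequencies mean, the raw datapath bandwidth can be estimated as clock frequency times datapath width. The sketch below assumes a 64-bit datapath, which is common for 10G Ethernet at 156.25 MHz but is not stated in this document; the function name `raw_bandwidth_gbps` is likewise only illustrative.

```python
def raw_bandwidth_gbps(clock_mhz: float, datapath_bits: int = 64) -> float:
    """Raw datapath bandwidth in Gbit/s.

    Assumption: the 64-bit default width is illustrative only; the
    document does not specify the NPAP or MAC datapath width.
    """
    return clock_mhz * 1e6 * datapath_bits / 1e9


mac_gbps = raw_bandwidth_gbps(156.25)   # Ethernet MAC clock from the text
npap_gbps = raw_bandwidth_gbps(175.0)   # NPAP clock in the asynchronous design

print(f"MAC:  {mac_gbps:.1f} Gbit/s")
print(f"NPAP: {npap_gbps:.1f} Gbit/s")
```

Under this assumption, the NPAP side of the asynchronous design has somewhat more raw bandwidth than the MAC side, whereas in the synchronous design both sides are equal.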

1.3. SFP Port Location

In this document, the term SFP also covers SFP+, QSFP+, QSFP28, and similar form factors.

1.3.1. Overview MLE NPAC-KETCH:

Figure 1.1 Source: Missing Link Electronics website

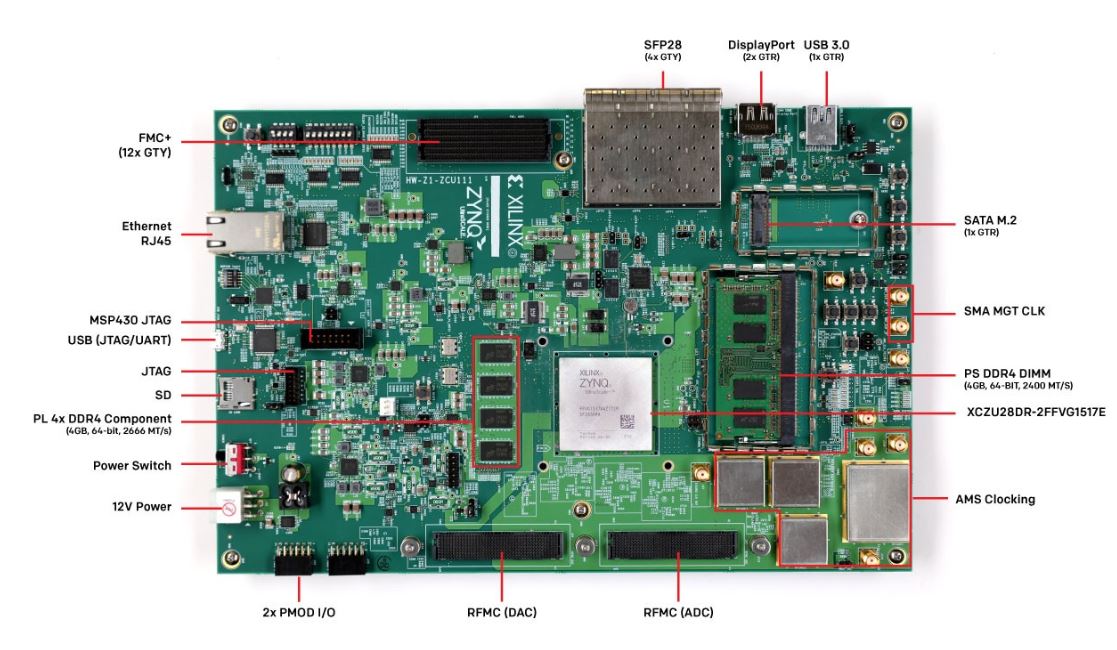

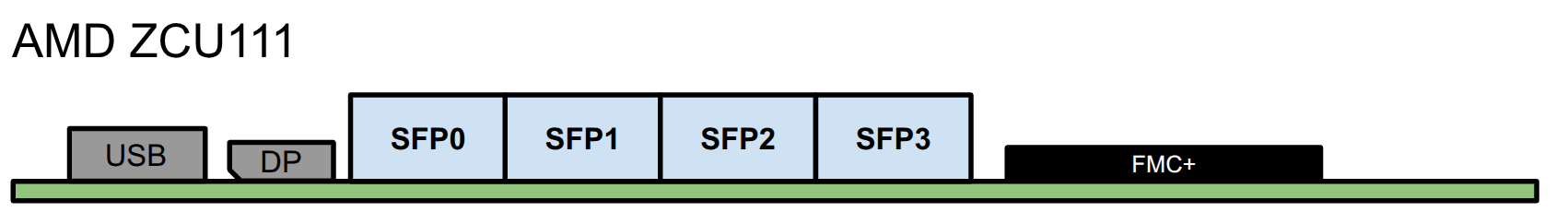

1.3.2. Overview AMD ZCU111:

Figure 1.2 Source: AMD ZCU111 Product Page

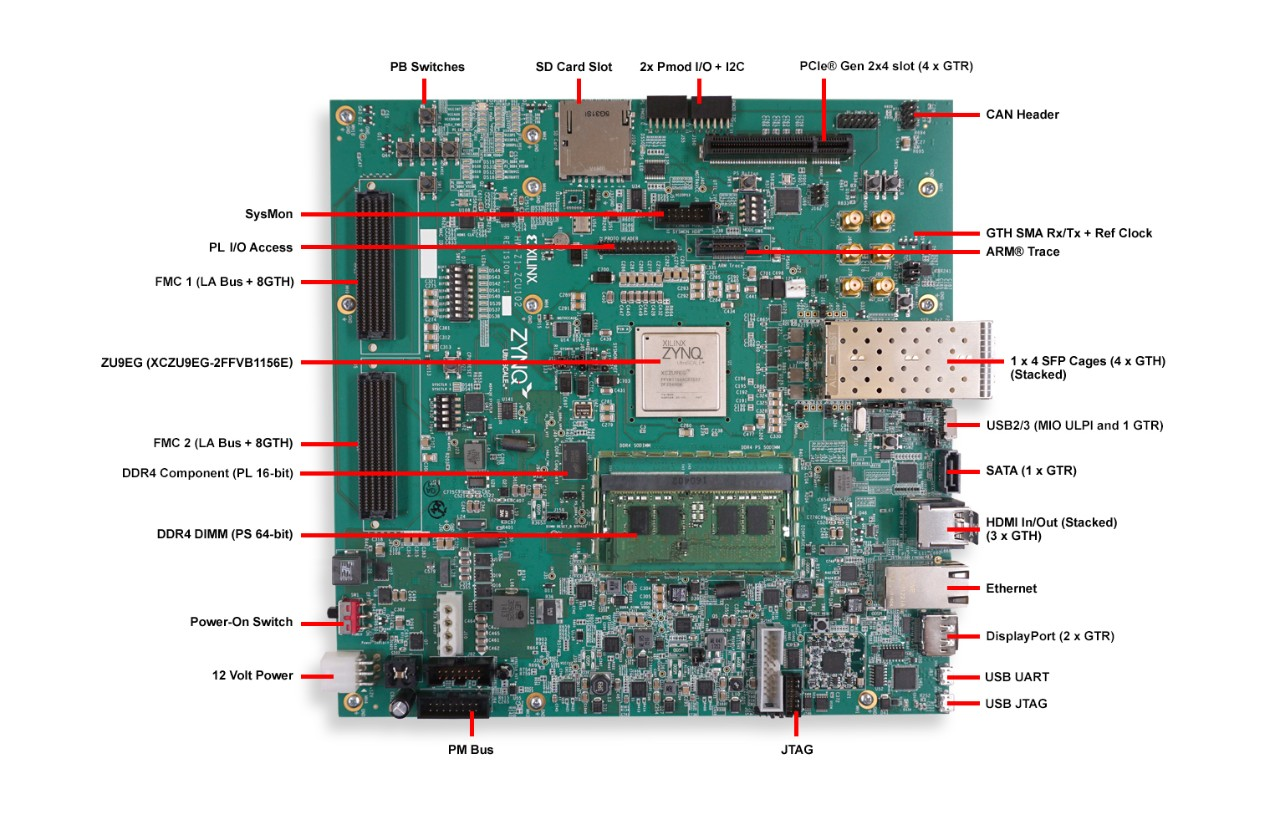

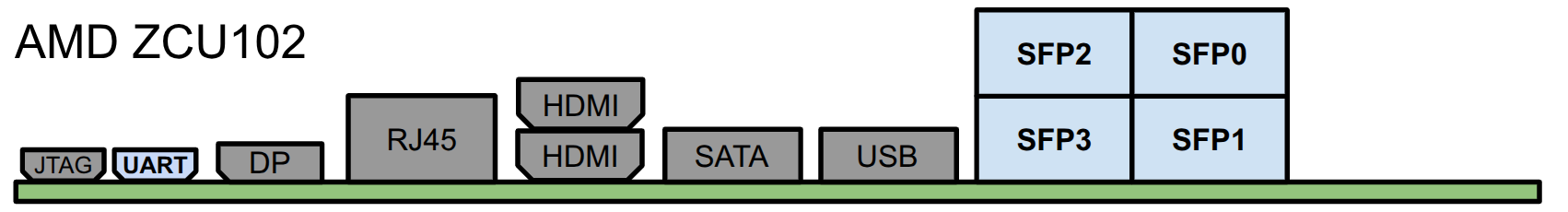

1.3.3. Overview AMD ZCU102:

Figure 1.3 Source: AMD ZCU102 Product Page

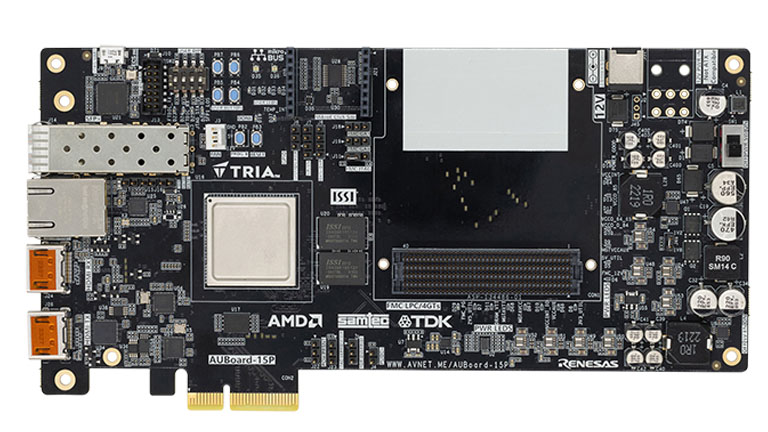

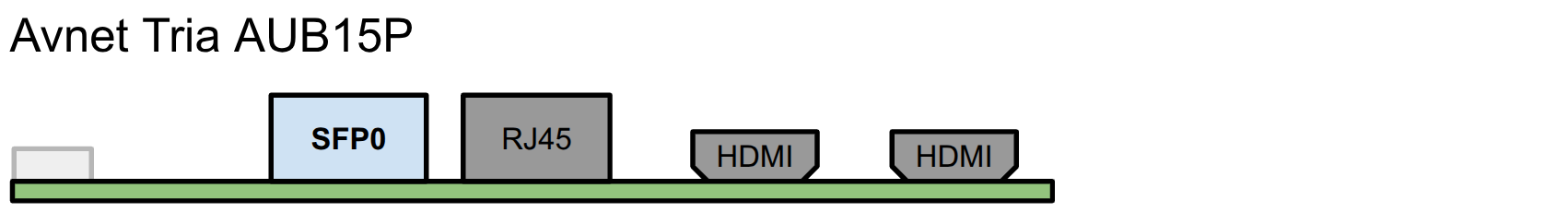

1.3.4. Overview Avnet Tria AUB15P:

Figure 1.4 Source: Avnet Tria AUB15P Product Page

1.3.5. Overview AMD Alveo U200:

Figure 1.5 Source: AMD Alveo U200 Product Page

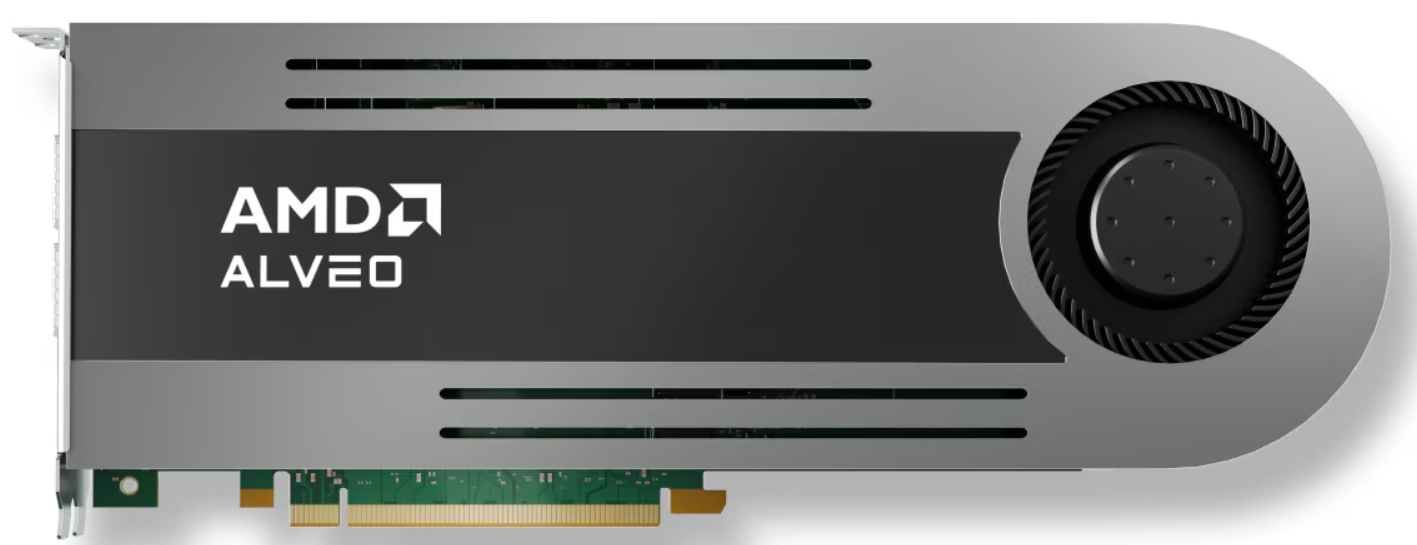

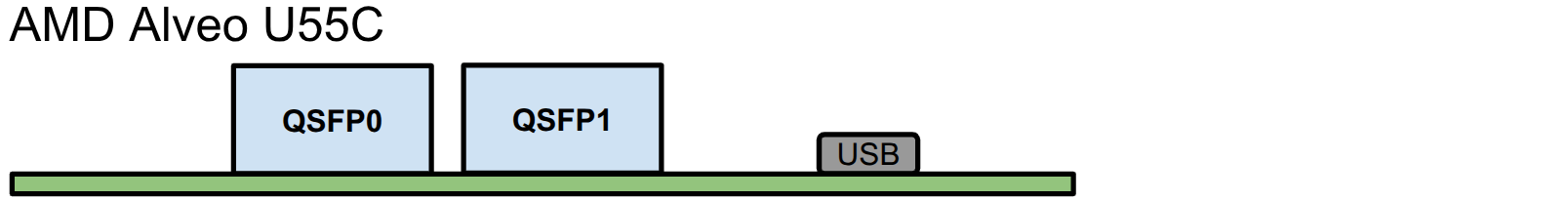

1.3.6. Overview AMD Alveo U55C:

Figure 1.6 Source: AMD Alveo U55C Product Page

1.3.7. Overview Arrow Agilex 5 Eagle:

Figure 1.7 Source: Arrow Agilex 5 Eagle Product Page

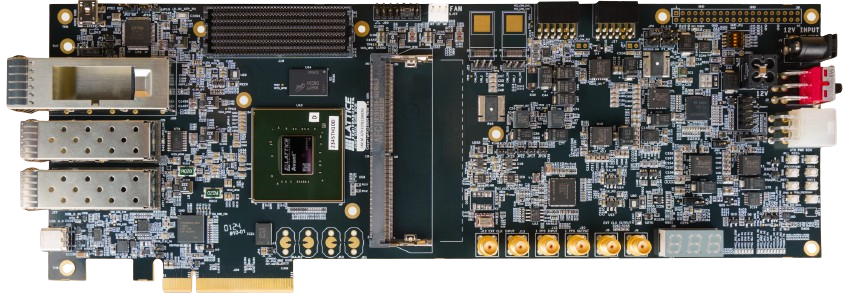

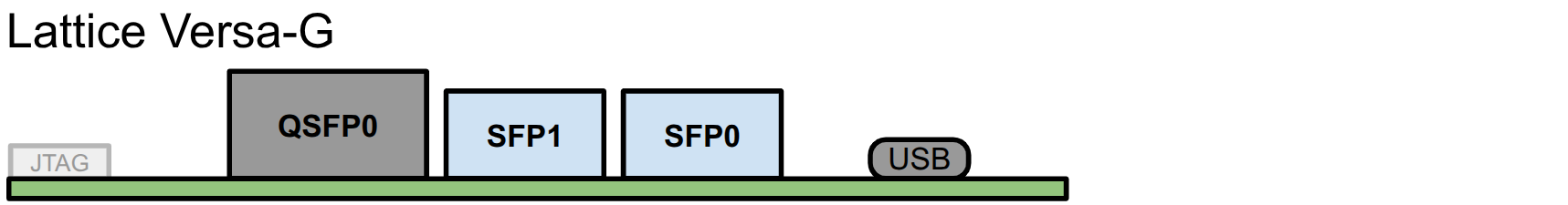

1.3.8. Overview Lattice Versa-G:

Figure 1.8 Source: Lattice Versa-G Product Page

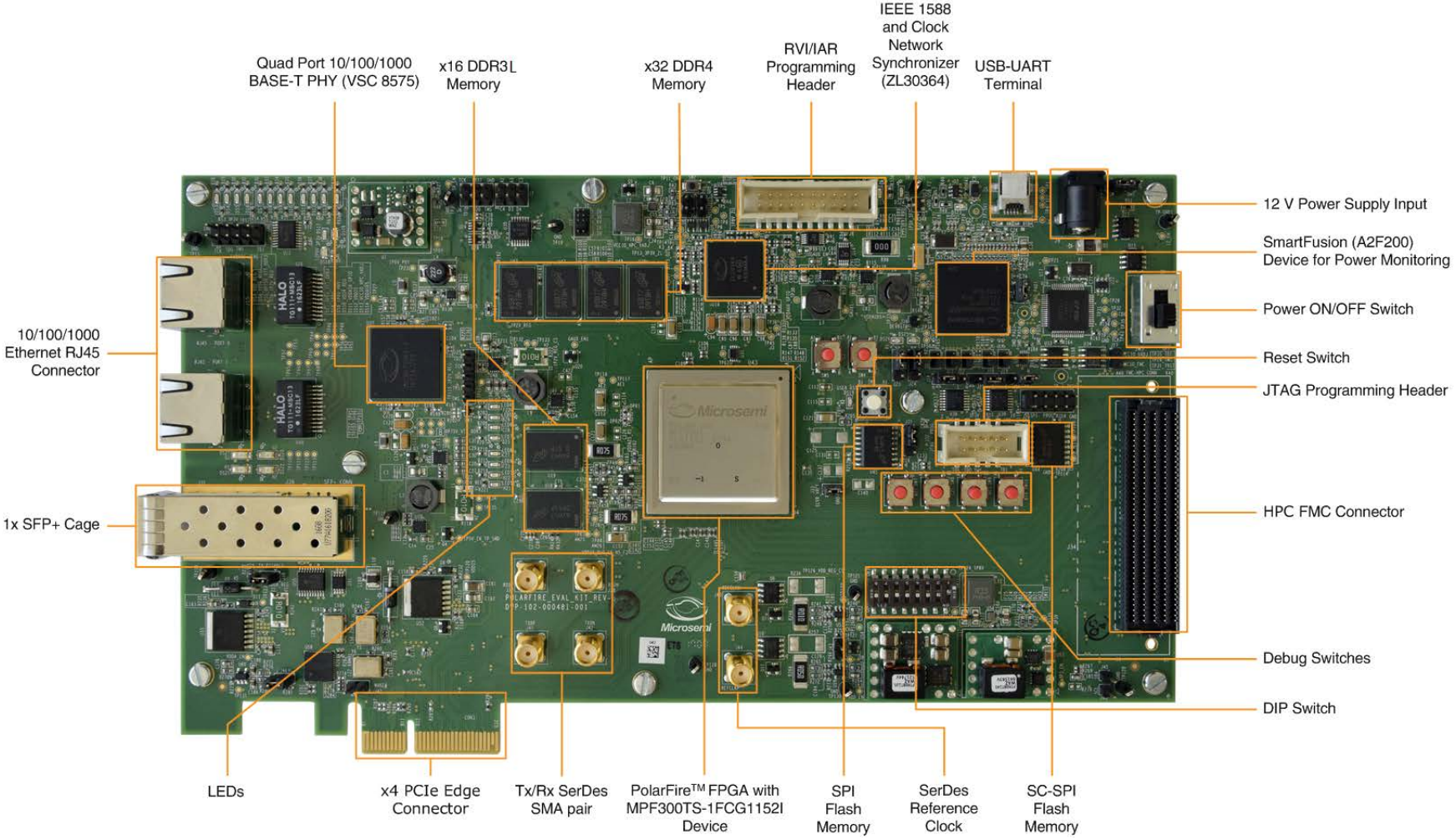

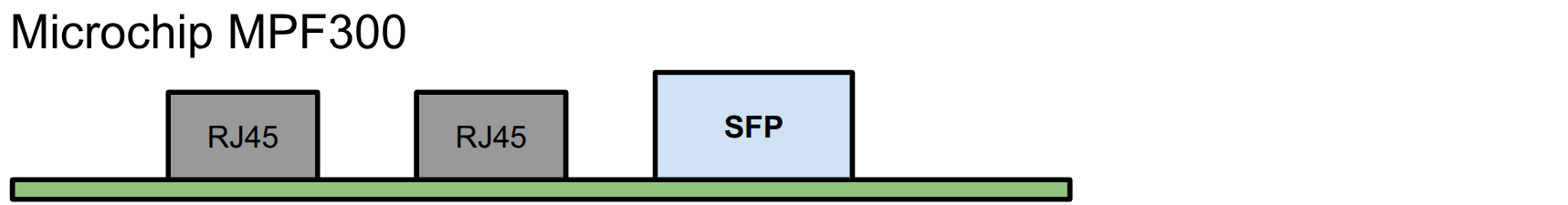

1.3.9. Overview Microchip MPF300:

Figure 1.9 Source: Mouser Electronics Microchip PolarFire Evaluation Kit Product Page

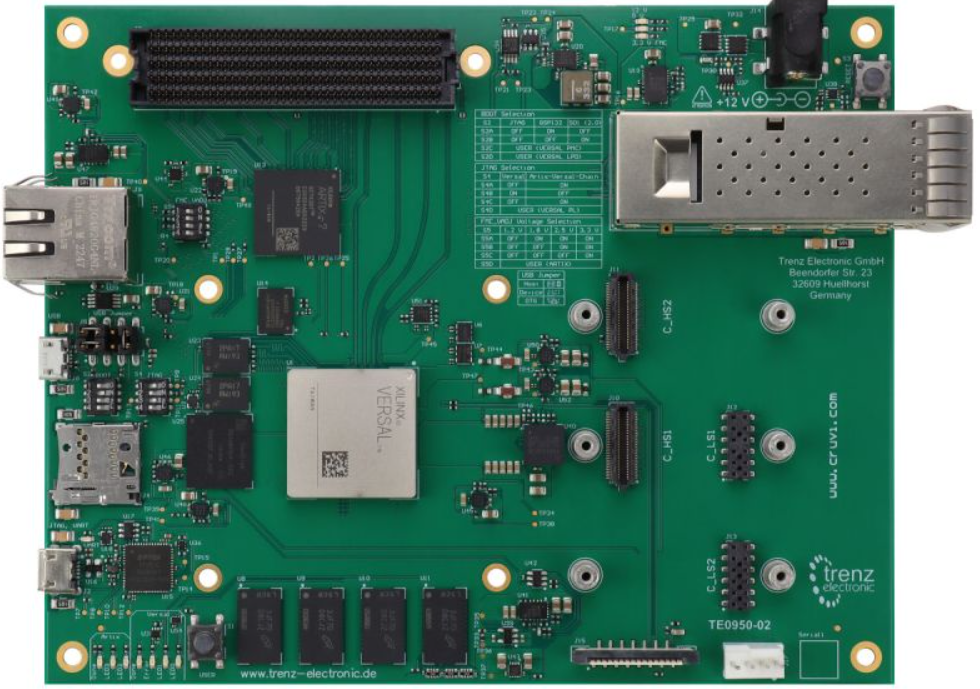

1.3.10. Overview Trenz TE0950:

Figure 1.10 Source: AMD Versal AI Edge Evalboard with VE2302 device Product Page