NPAP-10G Remote Evaluation

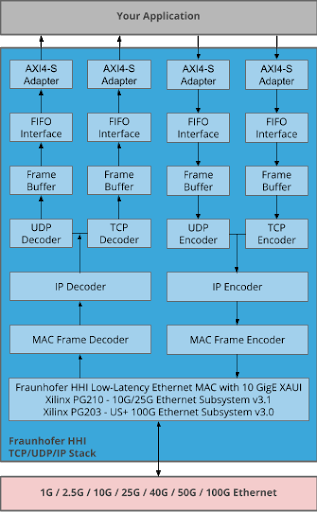

Try out MLE’s Network Protocol Accelerator Platform based on the TCP/UDP/IP Full Accelerator from German Fraunhofer Heinrich-Hertz-Institute!

The MLE-NPAP Remote Evaluation gives you first hands-on experience of the performance and usability of a TCP/UDP full accelerator. The advantage of MLE NPAP is the standalone capability of the stack, which means no CPU is required to send and receive data. This allows data rates near line rate.

With this evaluation you will see the results of the following tasks:

- PC to NPAP performance (PC initiator -> stream)

- NPAP to PC performance (PC receiver -> maerts)

- Performance with and without PC NIC enhancements

- Discussion of performance results

Requirements

Before you start the NPAP Remote Evaluation please get in touch with MLE and also read this document to the end.

After contacting MLE you will receive the following items:

- Username

- Password

- Timeslot

This ensures that you have exclusive access to the hardware.

Demo Setup

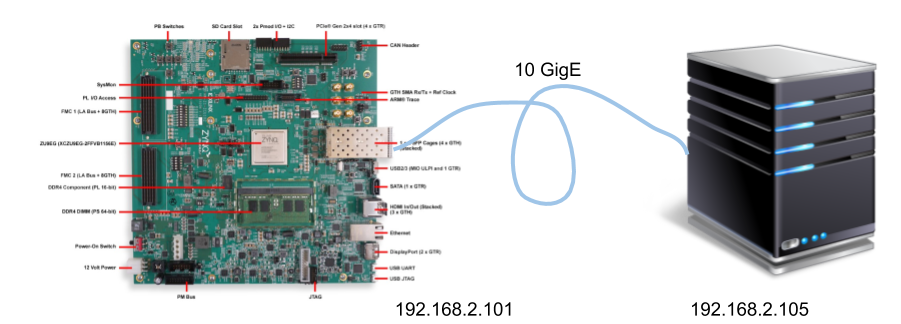

The image below shows the hardware setup in our lab.

The PC provides a 10GigE connection to the Xilinx ZCU102 board. Furthermore, the PC provides tools for performance analysis, like NetPerf.

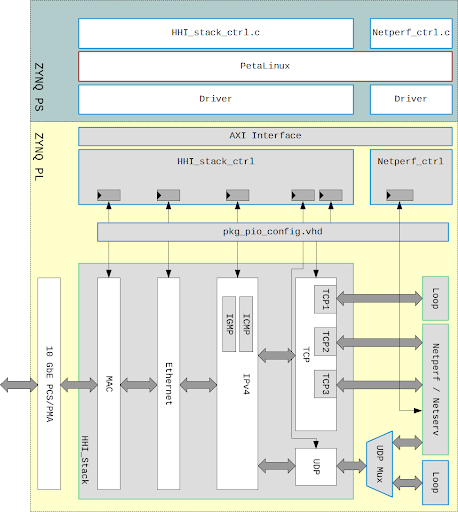

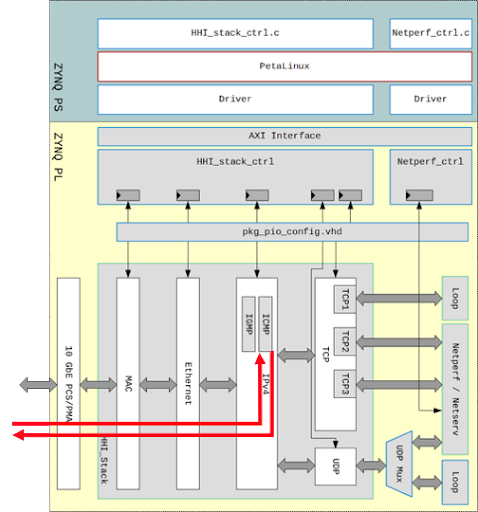

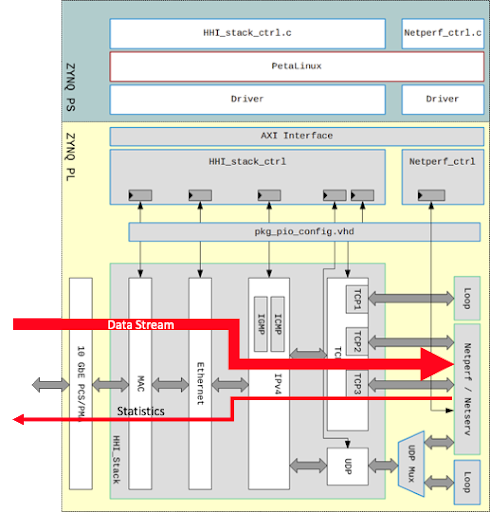

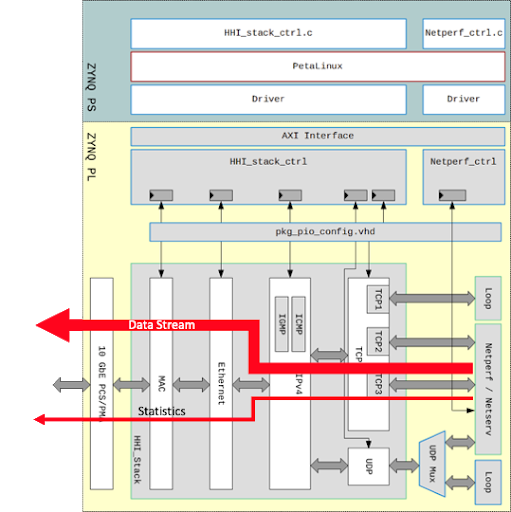

The ZCU102 runs an implementation of MLE NPAP 10G. The block diagram is shown below:

The block diagram shows the full implementation on a Zynq UltraScale+ device. The bold horizontal arrows indicate the high-throughput data path. The thin vertical arrows show the control and configuration interfaces.

The Stack itself can be seen as two bidirectional lanes for TCP and UDP:

The number of simultaneous sessions depends on the number of instantiated TCP blocks. The diagram above shows one TCP and one UDP session.

Demo Walk Through

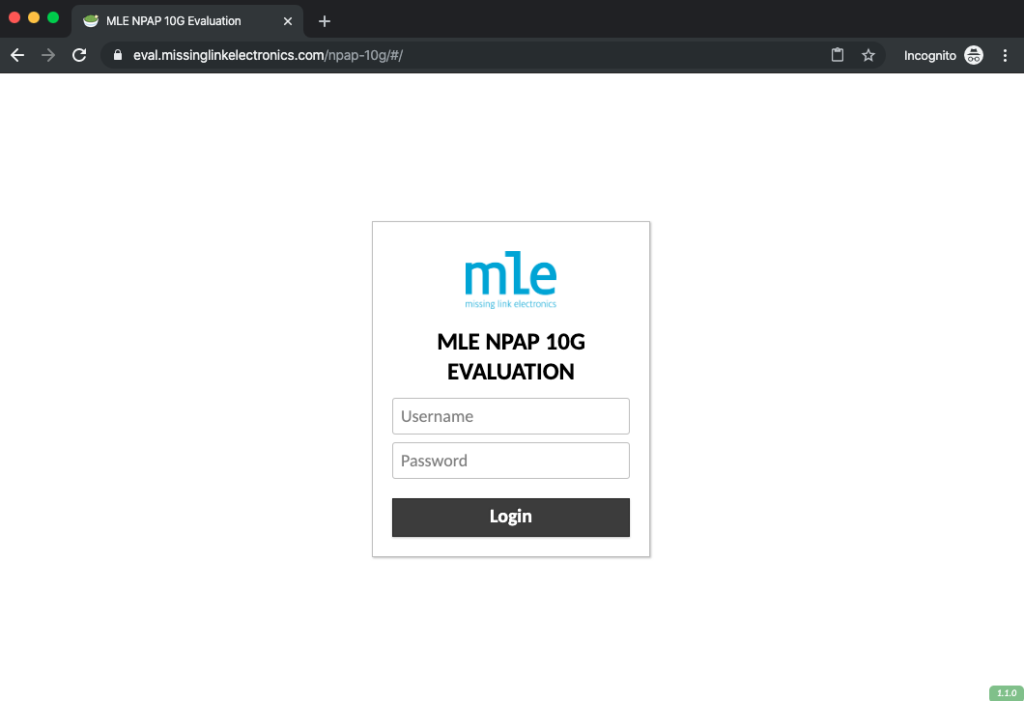

Login

Please connect to: https://eval.missinglinkelectronics.com/npap-10g

and use the provided login data.

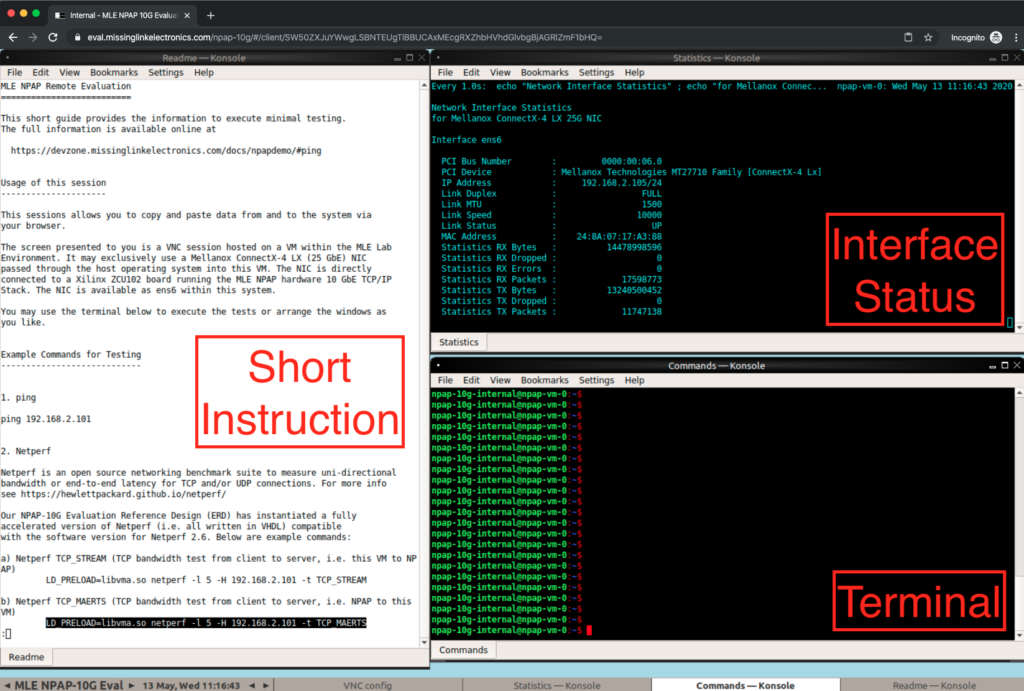

Desktop Overview

After login the screen will show a desktop with 3 windows:

- Short Instruction

- An Interface Status which polls connectivity statistics

- Terminal to run commands

Short Introduction:

This section provides some basic commands for initial testing, such as Ping and Stream from and to NPAP.

Interface Status Window:

This window shows the status of the PC's network interface: IP address, half/full duplex mode, packet size, link speed, link status, MAC address, and send/receive statistics.
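Most of these values can also be read directly in the Terminal. The following is a minimal sketch, assuming a Linux PC that exposes the usual per-interface files under `/sys/class/net`. The interface name `eth0` in the usage note is hypothetical, and this is not how the status window itself is implemented. The IP address is not part of sysfs and is therefore left out.

```python
from pathlib import Path

# Mapping from a readable field name to the sysfs file (relative to the
# interface directory) that holds the value on Linux.
FIELDS = {
    "mac_address": "address",
    "mtu": "mtu",
    "link_state": "operstate",
    "speed_mbit": "speed",
    "duplex": "duplex",
    "rx_bytes": "statistics/rx_bytes",
    "tx_bytes": "statistics/tx_bytes",
}


def read_interface_status(iface, sysfs_root="/sys/class/net"):
    """Return a dict with the status fields of one network interface.

    Fields that cannot be read (for example the speed of an interface
    without link) are reported as None instead of raising an error.
    """
    base = Path(sysfs_root) / iface
    status = {}
    for name, relative_path in FIELDS.items():
        try:
            status[name] = (base / relative_path).read_text().strip()
        except OSError:
            status[name] = None
    return status
```

Calling `read_interface_status("eth0")` returns all values as strings, so counters such as `rx_bytes` need an `int(...)` conversion before they can be compared between two polls.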

The Terminal Window:

In this window you can execute commands and run tests.

And yes, this is only the initial setup of your screen, so feel free to rearrange the windows or add new ones. Keep in mind that we gave you access to something that you can consider “your” virtual machine via a remote desktop. Within reason you can store data, keep notes, etc., although we cannot guarantee persistence.

Run The Commands

a. Ping

Copy the command:

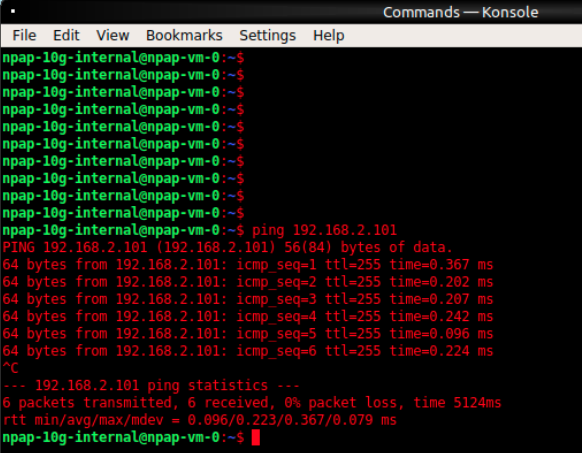

ping 192.168.2.101

to the Terminal and press Return. To abort ping, press “Ctrl + C”.

The Terminal should look like the following screenshot:

With ping, the PC sends an ICMP echo request (ping, ICMP packet type 8) to the NPAP implementation on the ZCU102 board. NPAP sends an echo reply (called pong, ICMP packet type 0) back to the PC.

As the TTL (Time To Live) is not decreased, you can assume a direct connection between board and PC.

The round trip time includes the propagation time over the wire as well as the processing time of sender and receiver.
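To make the two ICMP packet types more concrete, here is a minimal sketch that builds an echo request (type 8) and derives the matching echo reply (type 0), including the Internet checksum from RFC 1071. The function names, identifier, and payload are made up for illustration. The code only constructs the bytes; it neither sends anything nor reflects how NPAP implements ICMP in hardware.

```python
import struct

ICMP_ECHO_REQUEST = 8  # "ping"
ICMP_ECHO_REPLY = 0    # "pong"


def internet_checksum(data: bytes) -> int:
    """16-bit one's complement checksum as described in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:   # fold the carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo(icmp_type: int, identifier: int, sequence: int, payload: bytes = b"") -> bytes:
    """Build an ICMP echo message: type, code 0, checksum, id, sequence, payload."""
    header = struct.pack("!BBHHH", icmp_type, 0, 0, identifier, sequence)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", icmp_type, 0, checksum, identifier, sequence) + payload


def echo_reply_for(request: bytes) -> bytes:
    """Answer an echo request: same identifier, sequence and payload, type 0."""
    _type, _code, _checksum, identifier, sequence = struct.unpack("!BBHHH", request[:8])
    return build_echo(ICMP_ECHO_REPLY, identifier, sequence, request[8:])
```

A receiver can validate a message by running `internet_checksum` over the complete packet: for an intact packet the result is 0.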

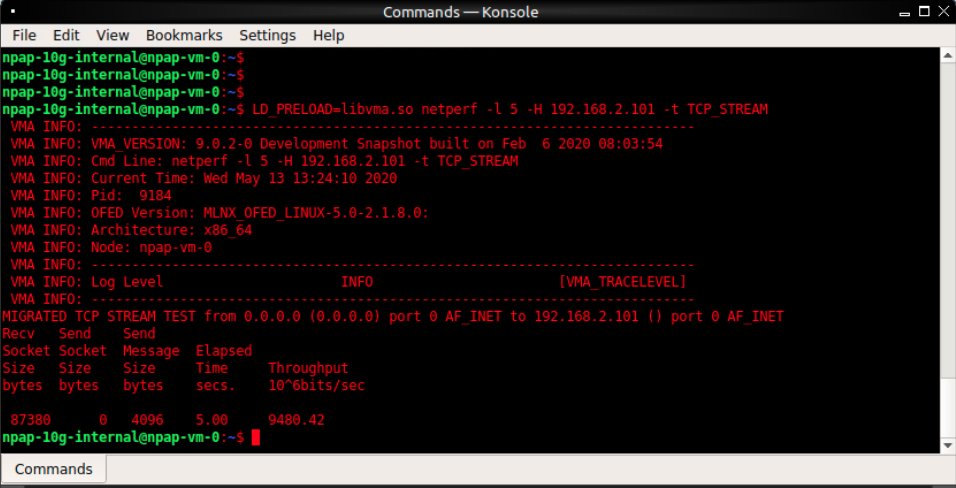

b. NetPerf TCP_STREAM

With the command

LD_PRELOAD=libvma.so netperf -l 5 -H 192.168.2.101 -t TCP_STREAM

a data stream is sent from NetPerf (installed on the PC) to the NetPerf instance on the ZCU102 (behind NPAP).

The results should look like this:

The results show that the transfer, with a size of 4096 bytes as reported by NetPerf, ran for 5 s and reached a transfer rate of 9.480 Gbit/s.
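To judge how close this is to line rate, it helps to estimate the highest TCP payload rate that 10GigE can carry at all. The sketch below does this arithmetic under assumptions that are not taken from the demo setup: a standard 1500-byte MTU (no jumbo frames), IPv4 and TCP headers of 20 bytes each, and the usual 38 bytes of per-frame Ethernet overhead. Under these assumptions the bound is about 9.49 Gbit/s without TCP options.

```python
# Sketch: upper bound for TCP payload throughput on 10 Gigabit Ethernet.
# All frame parameters are assumptions, not values read from the demo setup.

LINE_RATE_GBIT = 10.0                # nominal 10GigE line rate
MTU = 1500                           # assumed standard MTU, no jumbo frames
ETH_OVERHEAD = 7 + 1 + 14 + 4 + 12   # preamble, SFD, header, FCS, inter-frame gap
IPV4_HEADER = 20                     # IPv4 header without options
TCP_HEADER = 20                      # TCP header without options


def max_goodput_gbit(tcp_option_bytes: int = 0) -> float:
    """Payload share of each frame on the wire, scaled to the line rate."""
    payload = MTU - IPV4_HEADER - TCP_HEADER - tcp_option_bytes
    wire_bytes = MTU + ETH_OVERHEAD
    return LINE_RATE_GBIT * payload / wire_bytes


if __name__ == "__main__":
    bound = max_goodput_gbit()
    print(f"bound without TCP options:      {bound:.3f} Gbit/s")
    print(f"bound with 12-byte timestamps:  {max_goodput_gbit(12):.3f} Gbit/s")
    print(f"measured 9.480 Gbit/s relative to the first bound: {9.480 / bound:.1%}")
```

A different MTU or additional TCP options shift the bound, so the comparison is only meaningful once the actual link settings are known.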

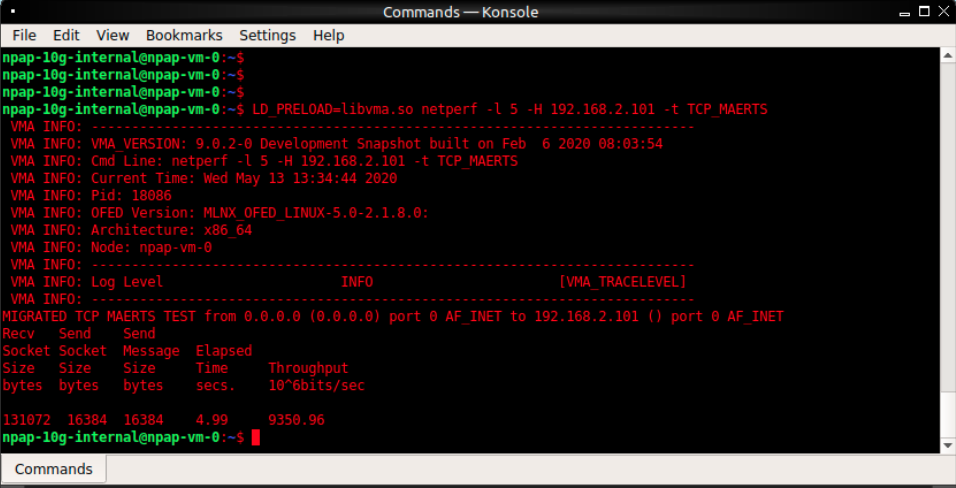

c. Netperf TCP_MAERTS

As the name maerts indicates (“stream” read backwards), this test runs in the opposite direction of the stream command. With the command:

LD_PRELOAD=libvma.so netperf -l 5 -H 192.168.2.101 -t TCP_MAERTS

a data stream is sent from the NetPerf instance on the ZCU102 (behind NPAP) to NetPerf (installed on the PC).

The results should look like this:

The results show that the transfer, with a size of 4096 bytes as reported by NetPerf, ran for 4.99 s and reached a transfer rate of 9.350 Gbit/s.
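The total amount of data moved in each test follows from rate times duration. The sketch below is plain arithmetic on the figures quoted above, assuming 1 Gbit = 10^9 bits. It gives roughly 5.9 GB for the TCP_STREAM run and roughly 5.8 GB for the TCP_MAERTS run.

```python
def bytes_transferred(rate_gbit_s: float, seconds: float) -> float:
    """Bytes moved at an average rate (in Gbit/s, 1 Gbit = 1e9 bit) over a duration."""
    return rate_gbit_s * 1e9 * seconds / 8


if __name__ == "__main__":
    # Rates and durations as quoted in the text for tests b. and c.
    for name, rate, duration in (("TCP_STREAM", 9.480, 5.0), ("TCP_MAERTS", 9.350, 4.99)):
        gigabytes = bytes_transferred(rate, duration) / 1e9
        print(f"{name}: about {gigabytes:.1f} GB in {duration} s")
```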

d. Discussion of the Results

Assumption: the hardware implementation is faster than the software.

The TCP_STREAM test (b.) shows a faster connection from PC to NPAP than the TCP_MAERTS test (c.) shows from NPAP to PC.

In test b. the PC can run as fast as possible and NPAP is always ready to receive data.

In test c. NPAP provides more data than the PC can process. Because of this, flow control continuously limits the amount of data in flight, which lowers the data rate.
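The effect described for test c. can be illustrated with a toy model: a sender that may only send as much as fits into the receiver's free buffer space, and a receiver that drains the buffer at its own pace. This is a deliberately simplified sketch with arbitrary units and hypothetical numbers. It models neither real TCP timing nor the NPAP implementation, but it shows that the slower side determines the achieved rate.

```python
def simulate_flow_control(sender_rate: int, receiver_rate: int,
                          buffer_size: int, ticks: int) -> int:
    """Return the number of bytes delivered to the receiving application.

    In every tick the sender pushes up to `sender_rate` bytes, but never more
    than the free space in the receive buffer (the advertised window). The
    receiving application then drains up to `receiver_rate` bytes.
    """
    buffered = 0
    delivered = 0
    for _ in range(ticks):
        window = buffer_size - buffered          # free space the sender may use
        buffered += min(sender_rate, window)     # sender is throttled by the window
        drained = min(receiver_rate, buffered)   # receiver processes what it can
        buffered -= drained
        delivered += drained
    return delivered


if __name__ == "__main__":
    # Fast sender, slow receiver (like test c.) versus the reverse (like test b.).
    print(simulate_flow_control(sender_rate=10, receiver_rate=7, buffer_size=20, ticks=100))
    print(simulate_flow_control(sender_rate=7, receiver_rate=10, buffer_size=20, ticks=100))
```

In both cases the delivered amount is set by the smaller of the two rates. The difference is that with a fast sender the buffer fills up and the window has to throttle the sender on every tick.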

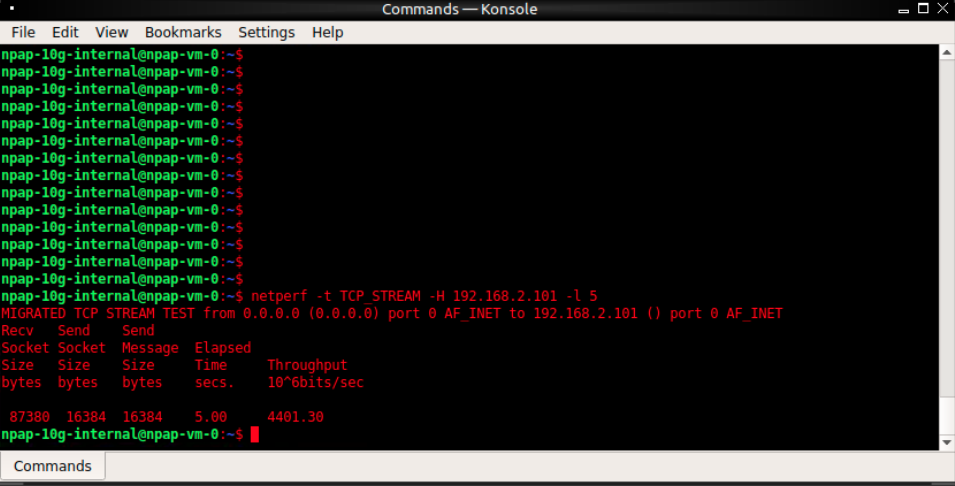

Impact of PC Setup on Performance

In the previous tests, the NIC in the PC was configured close to optimum. A misconfiguration can have a huge impact on performance. This can also be tested on the remote setup by omitting the LD_PRELOAD setting in front of the NetPerf command. The drivers can also have a huge impact: standard Linux drivers are not capable of NIC-specific optimizations.

Mellanox’s Messaging Accelerator (VMA) boosts performance for message-based and streaming applications. This evaluation uses it through the libVMA library. Without this library, the standard Linux drivers are used.

a. Without LibVMA

LibVMA makes it possible to use the hardware functions of the NIC. Without this library, Linux uses its standard configuration. The following command runs NetPerf without libVMA:

netperf -t TCP_STREAM -H 192.168.2.101 -l 5

Result:

The performance of the connection dropped to 4.401 Gbit/s.

b. With optimizations

To have a closer look at libVMA, let's run a more detailed command:

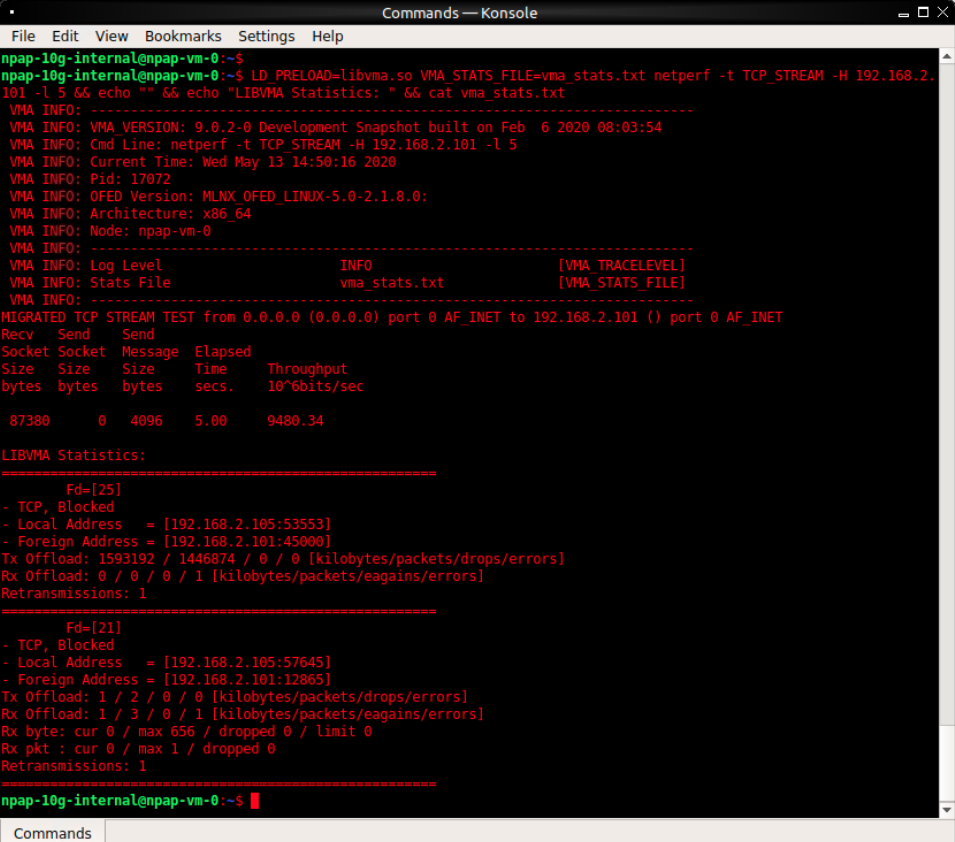

LD_PRELOAD=libvma.so VMA_STATS_FILE=vma_stats.txt netperf -t TCP_STREAM -H 192.168.2.101 -l 5 && echo "" && echo "LIBVMA Statistics: " && cat vma_stats.txt

Result:

This command prints additional statistics collected by libVMA and reaches a much higher data rate of 9.480 Gbit/s.
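The two variants differ only in the environment that NetPerf is started with. If you want to script the comparison, a minimal sketch could look like the following. The helper names `netperf_invocation` and `run_netperf` are hypothetical, while the NetPerf options and the `LD_PRELOAD` value are the ones used in the commands above.

```python
import os
import subprocess


def netperf_invocation(test="TCP_STREAM", host="192.168.2.101", seconds=5, use_vma=True):
    """Return (argv, env) for one NetPerf run, with or without libVMA preloaded."""
    argv = ["netperf", "-t", test, "-H", host, "-l", str(seconds)]
    env = dict(os.environ)
    if use_vma:
        env["LD_PRELOAD"] = "libvma.so"   # same preload as in the commands above
    else:
        env.pop("LD_PRELOAD", None)       # make sure the standard path is used
    return argv, env


def run_netperf(**kwargs) -> str:
    """Run NetPerf with the given settings and return its textual report."""
    argv, env = netperf_invocation(**kwargs)
    result = subprocess.run(argv, env=env, capture_output=True, text=True, check=True)
    return result.stdout
```

Calling `run_netperf(use_vma=False)` and `run_netperf(use_vma=True)` one after the other in the Terminal of the remote desktop reproduces tests a. and b. of this section. Keeping the environment handling in one place avoids accidentally inheriting `LD_PRELOAD` from the shell.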

🌐 www.missinglinkelectronics.com

MLE (Missing Link Electronics) is offering technologies and solutions for Domain-Specific Architectures, which focus on heterogeneous computing using FPGAs. MLE is headquartered in Silicon Valley with offices in Neu-Ulm and Berlin, Germany.